Mastering virtual port channels (vPCs) begins with knowing the components that make them up. This post assumes you are familiar with the very basics of virtual port channels – knowledge you would have gained through CCNA/CCNP Data Center.

Here are the components of the vPC:

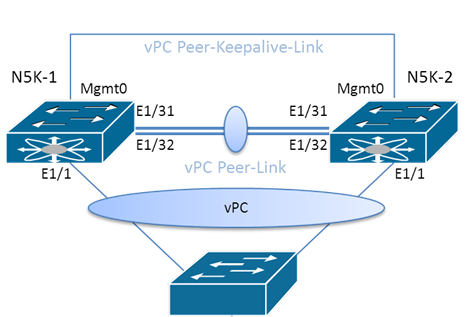

- vPC peers – two switches that act as a single logical switch to the downstream device.

- vPC peer link – a link between the vPC peers that is used to synchronize state. Consider using at least two physical links in a port channel for redundancy. MAC address table synchronization, as well as other control plane functions, takes place over this link.

- Cisco Fabric Services (CFS) – the protocol responsible for synchronization between the peers. It runs as CFS over Ethernet (CFSoE) across the peer link. STP is modified to keep the peer link ports forwarding.

- vPC peer keepalive link – a Layer 3 communication link between the vPC peers that acts as a secondary test of connectivity.

- vPC – the virtual port channel itself, which makes the two peers appear to the downstream device as a single logical switch. The downstream device does not need vPC support; it simply uses a standard port channel configuration.

- vPC member port – a port on a vPC peer switch that is a member of a vPC.

- vPC domain – the grouping of the two vPC peers and their vPC configuration, identified by a numeric domain ID.

- Orphan device – a device that is connected to only one peer in the vPC.

- Orphan port – the switchport that connects to an orphan device.

- vPC VLANs – the VLANs permitted to use the vPC. They must be permitted on the peer link.

- Non-vPC VLANs – the VLANs not permitted on the vPC.
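
To tie these components to configuration, here is a minimal NX-OS sketch for one of the two vPC peers. Treat it as an illustration, not a validated design: the domain ID (10), the keepalive addresses (from the 192.0.2.0/24 documentation range), the port channel numbers, and the interface numbers are all assumptions chosen for the example, and the second peer would need a mirrored configuration.

```
! Enable the features this sketch relies on
feature vpc
feature lacp

! vPC domain: the domain ID 10 is an assumed example value
vpc domain 10
  ! vPC peer keepalive link: Layer 3 reachability test to the other peer
  ! (addresses and VRF are assumptions for illustration)
  peer-keepalive destination 192.0.2.2 source 192.0.2.1 vrf management

! vPC peer link: a port channel built from two physical links
interface port-channel1
  switchport mode trunk
  vpc peer-link

interface Ethernet1/1-2
  switchport mode trunk
  channel-group 1 mode active

! vPC: the port channel facing the downstream device
interface port-channel20
  switchport mode trunk
  vpc 20

! vPC member port on this peer
interface Ethernet1/10
  switchport mode trunk
  channel-group 20 mode active
```

In this sketch, any VLAN that should be a vPC VLAN has to be allowed on both port-channel1 (the peer link) and port-channel20 (the vPC). A device plugged into a port that is not part of a vPC, and that connects to this peer only, would be an orphan device on an orphan port.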